Compliance in Generative AI: an overview

Have you heard of ChatGPT? A lot of entrepreneurs want to create products using generative AI. However, have they considered compliance?

ChatGPT has become very popular recently, with many people trying it out for entertainment. While OpenAI and ChatGPT are the names most often associated with generative AI, several other options are available. This post aims to assist those planning to integrate large language models (LLMs) into their products. Whether you are a seasoned developer or just starting in this field, the information shared here should be of value to you.

The technology is here and improving rapidly, but building business models on top of it will be challenging.

Investors are eager to invest in AI companies. But some of the biggest winners from the AI revolution will be existing companies that weren't founded to do AI, but are perfectly poised to benefit from it, as e.g. Facebook was perfectly poised to benefit from smartphones.

— Paul Graham (@paulg) May 14, 2023

The Near Future: A Quick Peek

OpenAI & ChatGPT, Google & Bard and various open-source LLMs have been moving fast lately. In the coming years, we can anticipate a plethora of novel solutions emerging in the AI space, with multiple players vying for dominance. As AI continues to evolve, we will inevitably see increasing integration of advanced analytical and generative APIs in a wide range of products, becoming an essential industry standard. While some products may move away from the chat interface, AI will remain a fundamental component integrated through APIs.

We believe that regulatory clarity will lag behind technological advancements, posing challenges to the industry. Both a lack of regulation and excessive (that is, unsuitable) regulation will be obstacles. A lack of regulatory clarity creates costs and is a barrier to entry for many. Excessive regulation hinders progress or makes compliance complicated and expensive.

The regulatory landscape of AI

When designing AI-powered services and thinking about compliance, we have to take note of several fields of law:

- Privacy / GDPR,

- Terms of Use / Consumer Protection,

- Intellectual property,

- upcoming new pieces of regulation, such as the draft EU AI Act.

Compliance for AI-powered services can be uncertain, as evidenced by ChatGPT's ban in Italy for a GDPR violation. OpenAI responded quickly with measures to comply with GDPR, and the Italian regulator lifted the ban. OpenAI has issued a notice for users and non-users describing which personal data is processed for algorithm training and the methods used. European users now have the right to object to the processing of their personal data. OpenAI also requires users to confirm their date of birth during sign-up and blocks registration for those under thirteen years of age to comply with minimum age requirements.
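A sign-up age gate of this kind can be sketched in a few lines. This is only a minimal illustration, assuming a self-declared date of birth and the thirteen-year threshold mentioned above. The names `MINIMUM_AGE`, `age_on` and `may_register` are hypothetical, this is not OpenAI's actual implementation, and the right threshold depends on the jurisdictions you serve.

```python
from datetime import date
from typing import Optional

# Assumption: the thirteen-year threshold mentioned in the text.
# Some jurisdictions may require a higher minimum age.
MINIMUM_AGE = 13


def age_on(birth_date: date, today: date) -> int:
    """Return the age in whole years on the given day."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def may_register(birth_date: date, today: Optional[date] = None) -> bool:
    """Allow sign-up only when the declared age meets the minimum."""
    if today is None:
        today = date.today()
    return age_on(birth_date, today) >= MINIMUM_AGE
```

A self-declared date of birth is easy to falsify, so treat a check like this as a baseline rather than proof of age.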

Bard is not available in EU, because of GDPR and uncertainty after the recent ban in Italy of ChatGPT.

Regulations are good, but they are hampering innovation in Europe, that is falling behind in many fundamental technologies for our common future. https://t.co/XkGYgaBBZh

— Gianluca Brugnoli (@lowresolution) May 13, 2023

If you are wondering how to address the compliance challenges of AI-powered solutions, we suggest following these steps. Firstly, focus on building products that adhere to minimum and clear regulatory rules, like GDPR and Consumer Protection. It's helpful to have clear and transparent Terms of Use, which could materially reduce your legal risks. Don't get bogged down with compliance questions right from the start. Secondly, once your product gains traction and generates revenue, it's wise to involve legal experts to ensure compliance.

We expect many challenges to arise relating to IP, particularly the use of training data and the upcoming AI Act in the EU.

The EU AI Act: Are we Doomed?

The European Union (EU) has recently taken a significant step towards regulating artificial intelligence (AI) as a whole, becoming the first major jurisdiction to do so. The draft was initially published in 2021, and the EU Parliament recently agreed on its position in the form of a series of amendments (available below as a pdf). This development has naturally sparked a debate about whether the move will make the EU a more or less attractive place for the development of AI technologies. The regulation is founded on a risk-based approach and is expected to have far-reaching implications, much like the General Data Protection Regulation (GDPR). It will affect any organisation that offers AI solutions on the EU market. However, the regulation is still in the draft stage and has not yet become law.

I knew EU regulators would be freaking out about AI. I didn't anticipate that this freaking out would take the form of unbelievably stupid draft regulations, though in retrospect it's obvious. Regulators gonna regulate.

— Paul Graham (@paulg) May 15, 2023

As previously noted, regulations tend to impede progress, increase the cost and complexity of development, and create entry barriers. However, there is a notable shift in the AI industry, with influential figures like Elon Musk and Sam Altman urging governments to regulate AI promptly. On the other hand, some reputable thinkers such as Paul Graham and Michael Jackson have argued that proposed legislation like the EU's Act will ultimately cause harm to the region.

The EU seems hellbent on kneecapping the open source AI community and small businesses. Some of the replies to this thread are great: "Im glad they can spring into action faster on imagined risk, than they did on a real pandemic 🙄". https://t.co/zJ0A3mK83E

— Michael Jackson (@WorkMJ) May 15, 2023

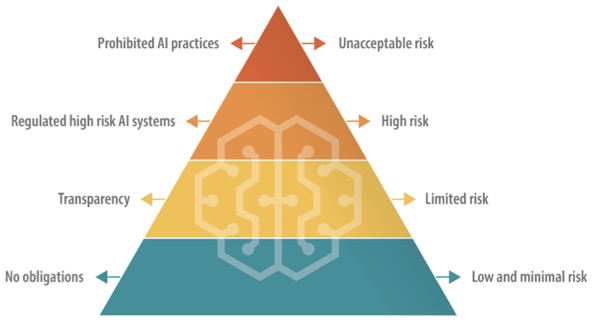

The EU Approach

The EU has taken a somewhat logical approach in drafting the AI Act. The proposal categorizes solutions according to the risks they pose to users and society. High-risk solutions will be heavily regulated or prohibited, while limited-risk solutions will be reasonably regulated. Low-risk solutions will require minimal to no regulation. This approach is sound, considering that the full extent of AI's impact on our lives is yet to be determined.

Just as the EU was the first to define "virtual currency", it appears it will also be the first to define "artificial intelligence".

‘artificial intelligence system’ (AI system) means a machine-based system that is designed to operate with varying levels of autonomy and that can, for explicit or implicit objectives, generate outputs such as predictions, recommendations, or decisions that influence physical or virtual environments.

Prohibited and high-risk practices

Determining the appropriate category for your service can be challenging. We acknowledge that there may be several borderline situations, and developers will attempt to avoid having their product categorised as high-risk. If you are not sure, seek professional legal advice.

The proposal lists the prohibited practices. We do not list all of them here, only some of the more interesting ones:

- biometric categorisation,

- social scoring of natural persons to decide how they are treated,

- assessing the risk of a natural person offending, or predicting the occurrence of a criminal or administrative offence, based on profiling of that person,

- building facial recognition databases through scraping of the internet or CCTV footage,

- inferring the emotions of a natural person in law enforcement.

These practices are strictly forbidden. When designing your product, check as early as possible that it does not fall within any of them.

Next, the proposal defines high-risk activities in two stages: first through a catch-all clause for AI systems, and then through a closed list of activities.

Generally, any AI system that meets both of the following conditions is high-risk:

- it is meant to be used as a safety component of a product AND

- it is required to undergo a third-party conformity assessment related to risks for health and safety under the laws of the EU.

The proposal also lists practices that are high-risk regardless of whether they are caught by the catch-all clause. Some of the more interesting ones are:

- biometric identification,

- assessing students and assessing candidates for admission to educational institutions,

- recruitment, screening applications, evaluating candidates, deciding on promotions or terminations,

- evaluating eligibility for public benefits and services,

- evaluating the creditworthiness of individuals, health and life insurance,

- establishing priority in dispatching emergency first response services,

- AI for use in polygraphs, evaluation of the reliability of evidence in criminal procedures, profiling of individuals in criminal cases,

- AI used in immigration procedures and verification of travel documents,

- interpreting the facts and the law in dispute resolution,

- large online platforms for recommending user-generated content.
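For an early internal triage, the two-stage test above could be sketched roughly as follows. This is an illustrative assumption, not a legal tool: the class `AISystem`, the function names and the use-case labels are all hypothetical, the labels only paraphrase part of the list above, and the draft's actual wording decides borderline cases.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# Hypothetical, incomplete labels paraphrasing the closed list above.
LISTED_HIGH_RISK_USES = frozenset({
    "biometric_identification",
    "education_assessment",
    "recruitment",
    "public_benefits_eligibility",
    "creditworthiness",
    "emergency_dispatch",
    "criminal_procedure",
    "immigration",
    "dispute_resolution",
    "large_platform_recommendation",
})


@dataclass
class AISystem:
    name: str
    is_safety_component: bool = False
    needs_third_party_conformity_assessment: bool = False
    uses: FrozenSet[str] = frozenset()


def caught_by_catch_all(system: AISystem) -> bool:
    """Stage 1: both triggers of the catch-all clause must apply."""
    return (system.is_safety_component
            and system.needs_third_party_conformity_assessment)


def listed_uses(system: AISystem) -> List[str]:
    """Stage 2: declared uses that appear on the closed list."""
    return sorted(system.uses & LISTED_HIGH_RISK_USES)


def is_high_risk(system: AISystem) -> bool:
    """High-risk if either stage matches."""
    return caught_by_catch_all(system) or bool(listed_uses(system))


cv_screener = AISystem("cv-screener", uses=frozenset({"recruitment"}))
print(is_high_risk(cv_screener), listed_uses(cv_screener))
```

A result of `False` here only means that none of the hypothetical labels matched. It is a prompt to read the list carefully, not a clearance.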

Products that are deemed high-risk will need to comply with rules such as (not a complete list):

- data governance (transparent, unbiased, record of datasets for training, pseudonymised),

- technical documentation (drawn up before the product is put on the market and made available),

- operation that is sufficiently transparent to enable users to interpret the system's output and use it appropriately,

- obtain an EU declaration of conformity,

- non-EU providers shall appoint an EU representative,

- register in the EU Database,

- inform users that they are interacting with an AI system,

- right to explanation.

Foundation model

The proposed act includes a definition of a foundation model:

‘foundation model’ means an AI model that is trained on broad data at scale, is designed for generality of output, and can be adapted to a wide range of distinctive tasks;

If your service falls under the category of a foundation model, you will need to abide by a series of obligations, similar to those imposed on high-risk practices but less stringent.

Dealing with the regulatory mess

Currently, you only need to worry about GDPR, IP, and having clear and transparent terms of use. Get those right and you will be fine.

The proposed AI Act still has to go through the trilogue, where the Commission, Council, and Parliament negotiate and reconcile the final text. It could be adopted before the next European elections, scheduled for June 2024, but there are no guarantees. There should therefore be enough time to prepare for compliance.

The content of this post was initially presented at the Podim Conference in May 2023.